Engineering design and manufacturing control in the cloud.

When VMware PC virtualisation started to gain traction amongst the engineering fraternity in the mid-noughties, it was a godsend. Prior to VMware, engineers were burdened with PC multiboot configuration hell. At best, it was very difficult to set up a single PC to boot to XP, NT or SCO Unix; at worst, it was just hell. Yet it was too expensive to keep a PC with the exact OS, hardware and software application configuration for every supported installation or development environment. VMware changed all that. Now an OS and application could be installed on a virtual machine, and the resulting file could be copied, moved and run on any sufficiently powerful hardware. This abstracted away the IBM PC compatible hardware problem for good, massively improving utilisation of existing hardware in the process.

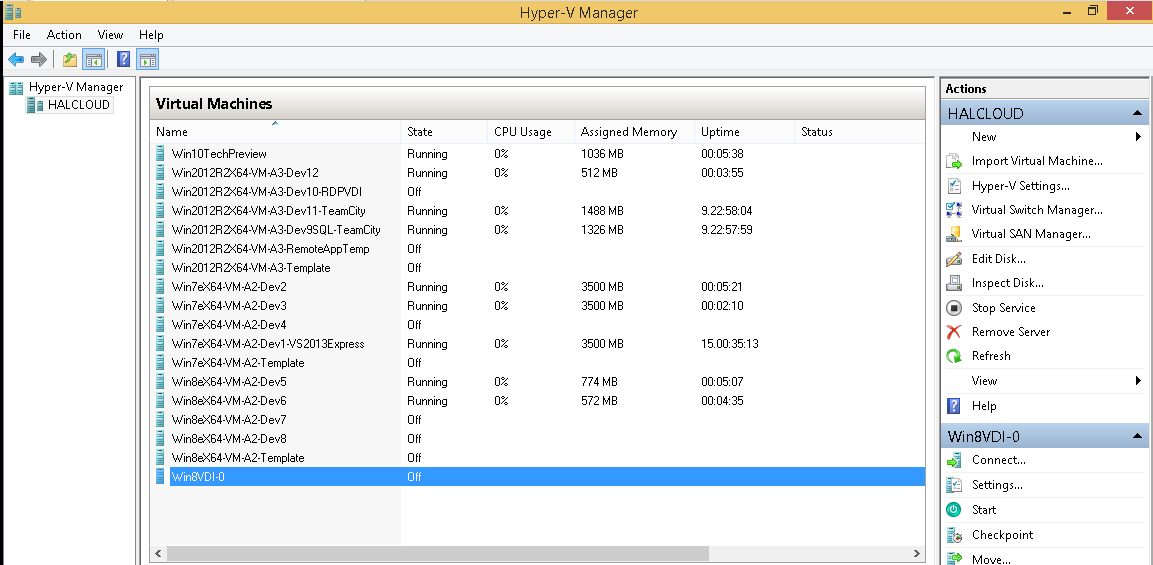

Figure: Private cloud running on one PC; 9 instances of Windows 7, 8, 10 and Windows Server 2012 R2 running simultaneously on a PC costing less than 2500 Euro.

Fast forward 10 years, and a high-end gaming PC running bare-metal Windows Hyper-V 2012 can implement an entire PC server and client infrastructure that authenticates seamlessly to pre-existing on-prem Active Directory services, and whose VMs (virtual machines) are accessible via RDP (Remote Desktop Protocol) and all sandboxed within their own virtual network environment. For automation and IT engineers alike, this is quite simply development and support nirvana. For their bosses, this is a paradigm shift in unlocking efficient use of their key resource: engineers.

Where to next?

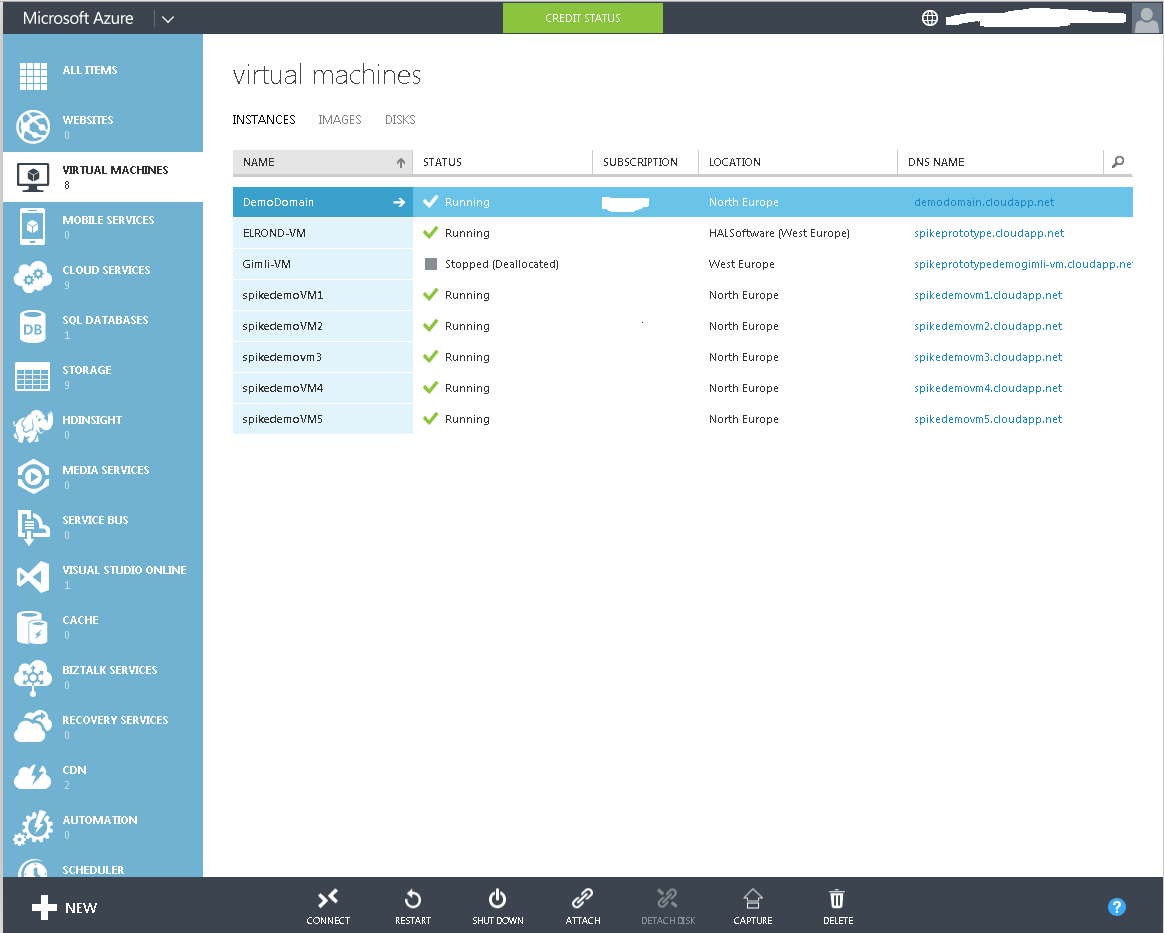

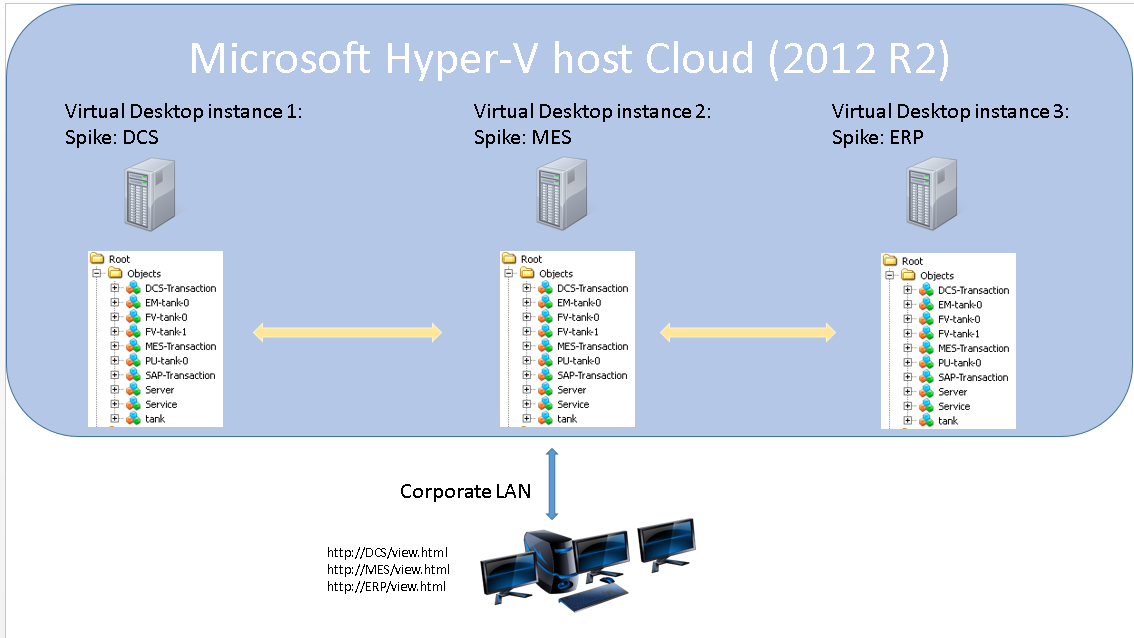

In January 2015, a Win 2012 R2 VM developed by HAL Software, running HAL Software's prototyping web server Spike, was built on the in-house private Hyper-V cloud and its image uploaded to the Windows Azure public cloud. From there it runs using Azure RemoteApp. It is now available worldwide, and logins are authenticated via our own cloud-based Active Directory. But that could just as easily be on-prem Active Directory (hybrid directory). Thus, in the space of a couple of days, we gave our application global reach and, through Azure, gave our international clients the scalability and security they demand.

Figure: A bird's-eye view from the public cloud; Windows Azure.

Public cloud: Microsoft Windows Azure

Windows Azure does not yet support Windows ‘desktop-as-a-service’ or single-instance, single-tenant elastic infrastructure. But Amazon and Google (for example) do, and the cost averages around 20 USD per instance per month for a single vCPU with 40 GB. There is now a move towards making vGPU processing available in the cloud, essentially abstracting away those really expensive workstation graphics cards, such that ACAD and video rendering applications can now run in the cloud.

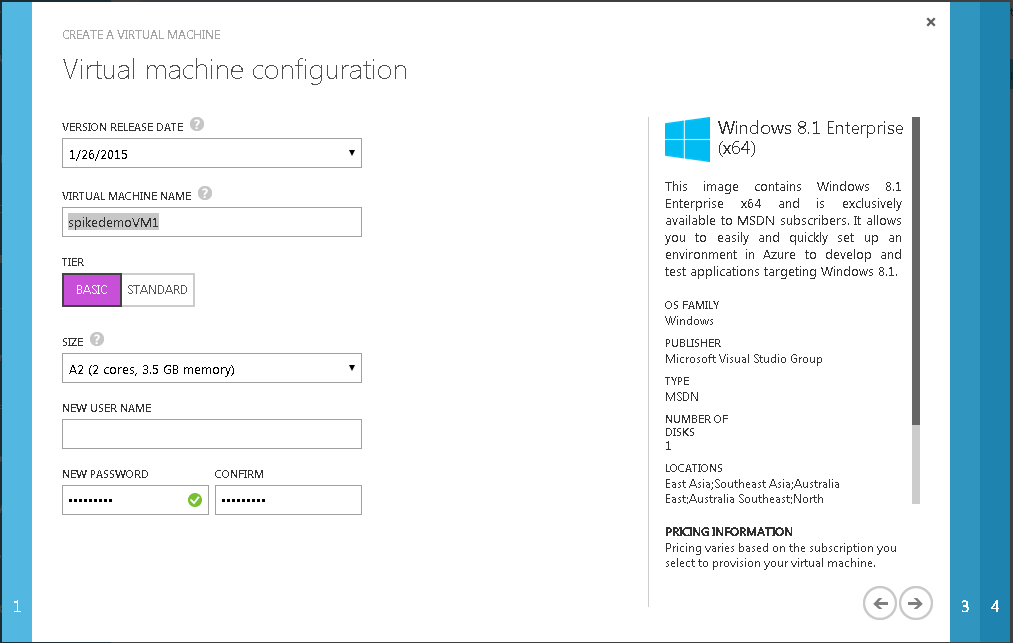

Figure: Provisioning a VM in Windows Azure.

Provisioning a PC in Azure using a built-in image is relatively straightforward. Images may only be uploaded for RemoteApp (which is by design not desktop-as-a-service), but that may change.

But what does this mean for engineering design in general, and for manufacturing automation systems in particular? We won't touch on cost savings in terms of hardware utilisation and reduced support headcount. Rather, we will focus on what can be virtualised and on the roadblocks to virtualisation.

General blockers to cloud implementation:

- Licensing

Microsoft will not license Windows 7 or Windows 8 to anyone else for public cloud infrastructure. (Amazon Windows 8 instances, for example, are in fact ‘reskinned’ Windows 2012 R2.) There is no licensing problem with private cloud infrastructure if you are a client of Microsoft and not a reseller.

Software vendors in general do not like virtualisation because of the piracy it encourages. There is almost no way to protect against licence copying through virtualisation. Software-as-a-service models, on the other hand, solve the piracy issue; hence the software vendors' drive to get clients onto a SaaS payment model.

- Application design

A lot of bespoke engineering design and control software is not supported on virtualised hardware. Emerson DeltaV is supported on virtualised hardware: their own hardware! In other words, DeltaV needs its own unique private cloud. So it is going to be difficult to argue that any headcount or capital cost can be saved in this case.

Rockwell Automation reserve the right to say ‘try and fix it on real hardware first before contacting us’, as evidenced in the following VMware/Rockwell Automation support statement:

“While Rockwell Automation does not insist customers recreate each issue without VMware before contacting support, we reserve the right to request the customer diagnose and troubleshoot specific issues without the VMware “variable”. This will only be done where we have reason to believe the issue is directly related to VMware. While functional problems have proven to be rare under VMware, problems related to performance and capacity are much more commonly reported, particularly with enterprise-class applications that recommend highly configured, dedicated systems for deployment”

It goes without saying that it is a lot of work to ‘remove’ virtualisation from the equation. (Back to the bad old days of custom-built support rigs.)

A modern application that runs on Windows 8.1 or Server 2012 R2 is virtualisable out of the box. Both of these operating systems are designed from the ground up to be run in a Hyper-V cloud and have what is called ‘dynamic memory’: the OS can release unused memory or request more memory on the fly, making for a very efficient cloud. However, legacy operating systems (Windows 2008 and older) cannot do this.

- Application components

Any application with a non-standard hardware component is not going to be easily virtualisable (e.g. a USB application licence key, or a Profibus/Profinet PCIe communications card). It is easy enough to associate the USB key with a VM, but that VM must then always be bound to particular physical hardware, and that is an impossibility in the public cloud, where VM execution is continuously shunted from one rack to another. In the case of the Profibus card, there is going to have to be a dedicated hardware PC to house the card and its software server component. Not all applications can implement this.

- Performance

In the author's experience, for a test database server, a virtual server running on bare-metal Hyper-V (i.e. the fastest Hyper-V implementation) was approximately 20% slower than the equivalent implementation on a physical machine. A 20% performance overhead may or may not be deemed acceptable.
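As a rough illustration of how such a figure can be derived, the sketch below compares elapsed times for the same workload on a physical and a virtual host. The function name and the sample timings are hypothetical and chosen only to make the arithmetic visible; they are not the author's measurements.

```python
def slowdown_percent(physical_seconds: float, virtual_seconds: float) -> float:
    """Return how much slower the virtual run is, as a percentage of the physical run."""
    if physical_seconds <= 0:
        raise ValueError("physical_seconds must be positive")
    return (virtual_seconds - physical_seconds) / physical_seconds * 100.0


# Hypothetical timings for the same test workload (illustrative, not measured data).
physical = 50.0  # seconds on the physical machine
virtual = 60.0   # seconds on the Hyper-V guest

print(f"Virtual server is {slowdown_percent(physical, virtual):.0f}% slower")
```

Whether the resulting percentage is acceptable depends on how much headroom the application has on its target hardware.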

Network performance may be a more critical issue; specifically, deterministic network performance. The EtherCAT protocol implements deterministic control over Ethernet hardware, but requires a dedicated Ethernet network, an EtherCAT network master, and a dedicated EtherCAT chip in the PLC slaves. However, any Ethernet device (e.g. a VM with its own dedicated physical network card) can be connected within the EtherCAT segment via switch ports. The Ethernet frames are tunnelled via the EtherCAT protocol. The EtherCAT network is fully transparent for the Ethernet devices, and the real-time features of EtherCAT are not disturbed. This hybrid control system can have virtualised servers, but with realtime slave PLC peer-to-peer communication.

The engineering design process: aspects open to virtualisation

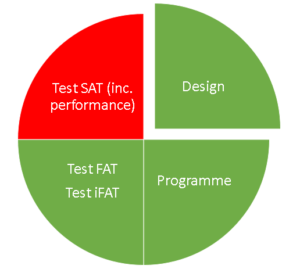

Most of a control system's design, development and testing can be virtualised, and there are real savings and also collaboration advantages to doing this within a virtualised network in a public cloud. SAT testing, where ‘the rubber meets the road’ on the factory floor, still needs to happen in the real world, and performance testing is now more important than ever.

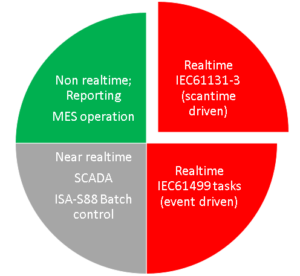

(Figure: Green – good for virtualisation. Red – cannot be virtualised.)

The virtualised engineering design office of the future will perhaps look like this:

Figure: Automation engineering applications available in the cloud

The picture depicts development environment instances of all the major suppliers, virtualised and available worldwide on demand (i.e. dynamically provisioned desktop-as-a-service) in the cloud.

There are a number of roadblocks to this implementation presently;

- Licensing.

Major control system vendors will not agree to this license implementation until forced to, by major clients or by the market simply turning away from their products.

The only network protocol available in the cloud is Ethernet TCP/IP: not Profinet, not Profibus and not EtherNet/IP. This is not a big deal for 80% of the development and testing, but final binding to remote IO cannot be virtualised, and performance tuning is going to be a lot more difficult.

- Unified address space.

Up to now, large DCS providers have had the best global controller address space implementation, providing easily configured and transparent communications between controllers. Rockwell's ControlNet implements a similar global address space (whereby all global controller tags are unique across the network no matter how many controllers there are, making communication easier). Now there is another alternative: OPCUA. Whether OPCUA can provide the same level of performance and robustness (failed comms identification, retries, etc.) as these proprietary protocols remains to be seen. It is possible that a combination of an EtherCAT network master, bespoke message queue / transactional functionality and gigabit Ethernet control stacks (such as EtherCAT) will remove the problem.
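To make the idea of a global address space concrete, here is a minimal sketch of a network-wide tag registry in which each tag name may belong to only one controller. The class, controller names and tag names are all hypothetical; this is not how any particular DCS, ControlNet or OPCUA product implements it.

```python
from typing import Dict


class GlobalTagRegistry:
    """Toy model of a network-wide tag namespace: each tag maps to exactly one controller."""

    def __init__(self) -> None:
        self._owner_by_tag: Dict[str, str] = {}

    def register(self, controller: str, tag: str) -> None:
        """Claim a tag for a controller; reject it if another controller already owns it."""
        owner = self._owner_by_tag.get(tag)
        if owner is not None and owner != controller:
            raise ValueError(f"tag {tag!r} is already owned by {owner!r}")
        self._owner_by_tag[tag] = controller

    def resolve(self, tag: str) -> str:
        """Return the controller that owns the tag (raises KeyError if unknown)."""
        return self._owner_by_tag[tag]


# Hypothetical controllers and tags, for illustration only.
registry = GlobalTagRegistry()
registry.register("PLC_A", "TANK1.LEVEL")
registry.register("PLC_B", "PUMP7.SPEED")

# Any node can find the owning controller from the tag name alone.
print(registry.resolve("PUMP7.SPEED"))
```

Because names are unique across the whole network, a reader of a tag never needs to know in advance which controller holds it, which is what makes inter-controller communication easy to configure.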

- Insufficient take-up of ‘open’ control system programming standards.

This is not strictly a blocker, in that we are not yet talking about virtualising controllers, but rather abstracting the code development and maintenance to the cloud and making it independent of controller type. The code will still have to be downloaded to a PLCopen-compatible (for example) realtime controller on the factory floor. But when we have finally virtualised PLCs / controllers, we are truly in play for rapid control system development and maintenance. The cloud will be like one great big DCS, whereby it is a case of downloading the cloud-based 61131-3/61499/OPCUA logic to the new PLCopen-compatible replacement controller.

PCs were virtualisable because of the IBM PC compatible standard. PLCs have no such standard. The main players (Siemens, Rockwell, Emerson and others) all have their own proprietary platforms, charge a premium, and want to keep it that way. Increasingly, however, there is a trend towards common operating systems from Wind River or CoDeSys for smaller-vendor PLC platforms, notably Bosch, Beckhoff and ABB. And these common operating systems are starting to accept IEC 61131-3 compliant PLCopen XML code. PLCopen source code can be exported from a number of programming environments. Newer industries (e.g. wind power) have adopted these newer open control platforms.

And if the roadblocks are removed, then what?

Once engineers start to become comfortable with development and testing in the cloud (private or public), migration of actual manufacturing controllers to virtualised PCs in the cloud may be back on the agenda. Why? Because if it is in the cloud, then the data is easily accessible, via OPCUA or via WebAPI. And if the factory floor data is accessible via WebAPI, then the fashion gurus within the IT industry are going to start talking about big data, enterprise IoT, and DeepMind-style AI heuristics that can ‘play’ their datasets to root out patterns and inefficiencies. The point is that, though the engineers on the ground may not see the advantages, that doesn't mean senior management haven't bought into yet another new tech paradigm shift. So the engineer at the coal face has got to be ready for it, even if it doesn't make sense from his standpoint. IT tech shifts gather momentum and become self-fulfilling prophecies. The move to cloud is an irreversible tech shift of tectonic proportions.

Manufacturing Automation components open to virtualisation

Let's start by examining which manufacturing automation tasks are truly realtime, because this is the guideline for what may be virtualisable. The ‘virtualisation friendly’ control system of the future allows the programmer to decide from the outset whether a task requires realtime capability (and thus must be compiled and downloaded to a realtime controller such as a Beckhoff TwinCAT system) or does not require realtime execution, and thus can be programmed in a high-level language such as C# .NET, thereby reducing the realtime controller requirement and improving engineer productivity in the process.

The ‘grey area’ identified in the diagram is the subject of much debate. Legacy SCADA system architecture will eventually be replaced by web servers for one simple reason: cost. There is no client licence cost in a SCADA web server; you can have unlimited clients. In addition, internet software development has resulted in web server performance outstripping SCADA systems through the use of JavaScript libraries and technologies such as realtime SignalR and WebAPI.

S88 batch control systems are, even today, largely PC-based, with an element of the batch (normally the ‘phase’) resident in a realtime controller. So they are a good candidate for virtualisation, provided the controller-to-virtual-PC network link uses modern event-driven status update technology (such as OPCUA). MES systems have always been a good candidate for virtualisation, as they normally don't have realtime requirements; they just need to be notified of changes in plant floor status.
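The ‘notify on change’ pattern that batch and MES systems rely on can be sketched in a few lines. This is a generic observer sketch, not the OPCUA subscription API; the class name, tag name and state strings are hypothetical.

```python
from typing import Any, Callable, Dict, List

Listener = Callable[[str, Any], None]


class PlantStatus:
    """Holds plant-floor values and notifies subscribers only when a value changes."""

    def __init__(self) -> None:
        self._values: Dict[str, Any] = {}
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def update(self, tag: str, value: Any) -> bool:
        """Store a value; return True (and notify) only if it differs from the last one."""
        if tag in self._values and self._values[tag] == value:
            return False  # unchanged: no network traffic, no work for the subscriber
        self._values[tag] = value
        for listener in self._listeners:
            listener(tag, value)
        return True


# A hypothetical MES-side subscriber that simply records what it is told.
received = []
status = PlantStatus()
status.subscribe(lambda tag, value: received.append((tag, value)))

status.update("REACTOR1.STATE", "RUNNING")
status.update("REACTOR1.STATE", "RUNNING")   # duplicate, so not forwarded
status.update("REACTOR1.STATE", "COMPLETE")
print(received)
```

The subscriber does no polling and has no timing obligations of its own, which is why this layer tolerates the variable latency of a virtualised host.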

(Diagram key: Red – cannot virtualise. Grey – debatable. Green – can virtualise)

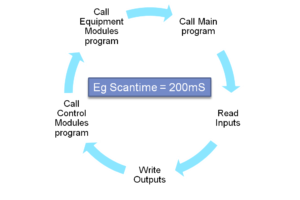

A word on realtime control and why it can't be virtualised:

PLC program module execution is still mostly cyclically driven (i.e. execute the complete program, then read and write IO, then execute the complete program again, all within a specified time), utilising a PLC scan that executes all modules in sequence and then reads/writes to IO. A PLC operating system is considered hard realtime, with hard interrupts, as opposed to ‘soft realtime’ systems like Windows. The ability to guarantee a response within a specified time period is the cornerstone of realtime system design, and this is why C and C++ have traditionally been used to code realtime programs, with memory managed manually (e.g. with malloc()). In reality, event-driven systems can perform just as fast, but speed is not the determining factor. High-level languages are largely (realtime C excepted) used in event-driven systems that are not ‘deterministic’, and are thus presently deemed unsuitable for any safety-related functions.
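The structure of a cyclic scan can be sketched as follows. This only illustrates the shape of the loop and how an overrun of the scan time is detected; Python on a general-purpose OS is not hard realtime, and `time.sleep` gives no timing guarantee. The function, tag names and scan time are assumptions made for the example.

```python
import time
from typing import Callable, Dict, List

IOImage = Dict[str, float]


def run_scans(
    read_inputs: Callable[[], IOImage],
    modules: List[Callable[[IOImage], None]],
    write_outputs: Callable[[IOImage], None],
    scan_time_s: float,
    scans: int,
) -> int:
    """Run a fixed number of scan cycles and return how many overran the scan time."""
    overruns = 0
    for _ in range(scans):
        start = time.monotonic()
        image = read_inputs()        # snapshot of the inputs for this scan
        for module in modules:       # execute every module, in sequence
            module(image)
        write_outputs(image)         # write results back to the IO
        elapsed = time.monotonic() - start
        if elapsed > scan_time_s:
            overruns += 1            # a hard realtime system must never get here
        else:
            time.sleep(scan_time_s - elapsed)  # idle until the next cycle is due
    return overruns


# Hypothetical IO and logic: open the valve when the level is above 50.
def read_level() -> IOImage:
    return {"level": 75.0}


def valve_logic(image: IOImage) -> None:
    image["valve"] = 1.0 if image["level"] > 50.0 else 0.0


outputs: List[IOImage] = []
overrun_count = run_scans(read_level, [valve_logic], outputs.append, scan_time_s=0.01, scans=3)
print(f"{len(outputs)} scans completed, {overrun_count} overran")
```

On a hypervisor the host can pause the guest at any point inside the loop, so the overrun count cannot be bounded. That, rather than raw speed, is the determinism problem.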

However!

A large part of a modern S88-style control system is procedural control as opposed to equipment control, and this part of the software (unit operations, unit procedures, procedures, some phases, etc.) can be provided by a higher-level language and can be virtualised. The batch recipe engine can be virtualised.

In a PLC-SCADA-Batch implementation, the batch server (which stores the control recipes) generally runs on a separate, independent PC and communicates with the PLCs (which implement S88 state phase logic) via OPC, so it is virtualisable provided the OPC driver supports NAT. However, DCS implementations are more complex; it is often a case of virtualising all the DCS components or nothing.
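The split between the batch server and the phase logic can be pictured as a small state machine: the (virtualisable) batch server issues commands, and the phase in the controller moves between states. The sketch below uses a deliberately simplified subset of states and commands, not the full S88 state model, and all names are illustrative.

```python
from enum import Enum


class PhaseState(Enum):
    IDLE = "IDLE"
    RUNNING = "RUNNING"
    HELD = "HELD"
    COMPLETE = "COMPLETE"
    ABORTED = "ABORTED"


# (current state, command) -> next state. Simplified subset for illustration.
TRANSITIONS = {
    (PhaseState.IDLE, "START"): PhaseState.RUNNING,
    (PhaseState.RUNNING, "HOLD"): PhaseState.HELD,
    (PhaseState.HELD, "RESTART"): PhaseState.RUNNING,
    (PhaseState.RUNNING, "DONE"): PhaseState.COMPLETE,   # raised by the phase itself
    (PhaseState.RUNNING, "ABORT"): PhaseState.ABORTED,
    (PhaseState.HELD, "ABORT"): PhaseState.ABORTED,
    (PhaseState.COMPLETE, "RESET"): PhaseState.IDLE,
    (PhaseState.ABORTED, "RESET"): PhaseState.IDLE,
}


def apply_command(state: PhaseState, command: str) -> PhaseState:
    """Return the next phase state, or raise ValueError if the command is not allowed."""
    try:
        return TRANSITIONS[(state, command)]
    except KeyError:
        raise ValueError(f"command {command!r} not valid in state {state.name}") from None


# A hypothetical recipe step as the batch server might drive it.
state = PhaseState.IDLE
for command in ["START", "HOLD", "RESTART", "DONE", "RESET"]:
    state = apply_command(state, command)
    print(command, "->", state.name)
```

Only the commands and state changes cross the network, which is why the recipe side has no realtime requirement of its own.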

(A system capable of explicitly defining, mixing and matching realtime and non-realtime activities within the same development environment is Beckhoff TwinCAT, which is based on Visual Studio 2013. Assemblies from different programming languages can be referenced, thus providing this level of flexibility.)

The PLC standard IEC 61499 is a move towards event-driven programming and an abstraction of control modules, such that it is less important which PLC / PAC the code modules are executing on, and there is greater flexibility in terms of execution order. This model is already common in high-end DCS systems such as Emerson DeltaV.

To 2020 and beyond; New ‘virtualisation friendly’ Automation application architecture

Once you have decided which systems are virtualisable, it is a matter of visualising the system requirements initially (by developing a prototype, for example), and then finding a vendor that will roll in behind those requirements. It is too late to approach a prospective vendor with virtualisation requirements when he knows he has already made the sale.

Modern Control application architecture virtualisation-friendly guidelines;

- Native x64 application, .NET 4.5 or greater. Truly multithreaded and capable of utilising multicore boxes and more than 4 GB RAM. Don't take this for granted. Most SCADA and batch systems that the author has programmed were coded for x86, using Win32 and DCOM, and simply don't scale well.

- Runs on Windows 2012 R2 and authenticates to Active Directory.

- Implements sufficient cybersecurity (see the recent article on the same at hal-software.com). The applications themselves should be built from the ground up as verifiable code, implementing best practices such as strong-named assemblies in .NET code. Trials and guidelines for implementing the U.S. SKPP (Separation Kernel Protection Profile) or the equivalent EURO-MILS specification on commercial software development platforms are needed.

- Has no proprietary hardware component (e.g. licence dongles or Profinet cards).

- SCADA-style functionality to be based on web server platforms. It really helps virtualisation, and redundancy / scalability implementations, if the application implements a 3-tier web architecture whereby the database server is separate from the web server, which is separate from the data collector server. Companies such as Inductive Automation are leading the way in this new SCADA architecture.

- For true control network integration, the control system needs the ability to dedicate a gateway VM to a physical network card that is on a control Ethernet network such as EtherCAT. The cloud needs to provide this capability. Spoiler alert! It is the author's understanding that neither vSphere nor Hyper-V provides this capability. (Unlike USB devices, it is not possible to connect a host's network adapter directly to a guest virtual machine. However, you can reserve a network adapter of the host for use by guest virtual machines only, effectively allocating it exclusively to the VM.) This is a key stumbling block to virtualising part of a control system.

- NAT-capable network traffic. If a vendor is embedding proprietary algorithms into Ethernet network protocols in order to guarantee delivery / quality of service (deterministic control network), then it is not virtualisable.
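One way to use the guidelines above when assessing a vendor's product is as a simple checklist. The sketch below encodes a handful of them; the field names and the example product are hypothetical, and the checks are no substitute for testing the application itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ApplicationProfile:
    """Answers gathered from a vendor or from testing; field names are illustrative."""
    native_x64: bool
    authenticates_to_active_directory: bool
    needs_proprietary_hardware: bool   # e.g. licence dongle or fieldbus card
    web_server_based_scada: bool
    nat_capable_traffic: bool


def virtualisation_blockers(profile: ApplicationProfile) -> List[str]:
    """Return the guidelines the application fails; an empty list means none were found."""
    blockers: List[str] = []
    if not profile.native_x64:
        blockers.append("not a native x64 application")
    if not profile.authenticates_to_active_directory:
        blockers.append("does not authenticate to Active Directory")
    if profile.needs_proprietary_hardware:
        blockers.append("depends on a proprietary hardware component")
    if not profile.web_server_based_scada:
        blockers.append("SCADA functionality is not web server based")
    if not profile.nat_capable_traffic:
        blockers.append("network traffic is not NAT capable")
    return blockers


# Hypothetical legacy product with a licence dongle and x86-only binaries.
legacy = ApplicationProfile(
    native_x64=False,
    authenticates_to_active_directory=True,
    needs_proprietary_hardware=True,
    web_server_based_scada=False,
    nat_capable_traffic=True,
)
for blocker in virtualisation_blockers(legacy):
    print("-", blocker)
```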

If you want to see what a new next generation SCADA, Batch or MES server is going to look like, then look no further than;

Yes, this is only a prototyping system. But sooner or later all control systems will implement a similar architecture. Here at HAL Software, we don't design hybrid virtualised control systems. Yet…

Acronym key;

DCS – distributed control system

PLC – programmable logic controller

PAC – programmable automation controller (used interchangeably with the term PLC)

VM – virtual machine

OS – operating system

OPCUA – OPC unified architecture

NAT – network address translation
